
Step-by-Step Guide to Running Stable Video Diffusion (SVD) on Linux

Stable Video Diffusion (SVD) is a video generation model, and this article provides a detailed guide on running SVD on Linux along with problem-solving tips.

Let’s explore how to use Stable Video Diffusion (SVD), an image-to-video generation model released by Stability AI and built on the Stable Diffusion image model. Both the pretrained models and the open-source code are publicly available, so anyone can get started quickly once the environment is set up.

Official Resources and Webpages for SVD

- GitHub Repository: Stability-AI/generative-models

- Hugging Face: stabilityai/stable-video-diffusion-img2vid-xt

- Research Paper: Scaling Latent Video Diffusion Models to Large Datasets

Installation Prerequisites

- GPU Memory Requirement: At least 16GB of GPU memory.

- RAM Requirement: Recommended 32GB of RAM or more.
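The two requirements above can be checked before installing anything. The following is a minimal sketch, assuming an NVIDIA GPU with `nvidia-smi` on the PATH and a Linux `/proc/meminfo`; the function names and the 0.9 tolerance factor are illustrative choices, not part of SVD.

```python
import subprocess

# Thresholds from the prerequisites above. Reported totals are usually a bit
# below the nominal size, so a tolerance is applied. The 0.9 factor is an
# arbitrary assumption for this sketch.
MIN_GPU_MIB = 16 * 1024
MIN_RAM_GIB = 32
TOLERANCE = 0.9


def parse_gpu_memory_mib(smi_output: str) -> list[int]:
    """Parse `nvidia-smi --query-gpu=memory.total --format=csv,noheader,nounits`
    output: one integer (MiB) per GPU."""
    return [int(token) for token in smi_output.split()]


def parse_total_ram_gib(meminfo_text: str) -> float:
    """Extract MemTotal (reported in kB) from /proc/meminfo text, in GiB."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemTotal:"):
            return int(line.split()[1]) / (1024 ** 2)
    raise ValueError("MemTotal not found")


def read_gpu_memory_mib() -> list[int]:
    """Query nvidia-smi; return [] if the tool is missing or fails."""
    cmd = ["nvidia-smi", "--query-gpu=memory.total",
           "--format=csv,noheader,nounits"]
    try:
        result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    except (FileNotFoundError, subprocess.CalledProcessError):
        return []
    return parse_gpu_memory_mib(result.stdout)


def check_prerequisites(gpu_mib: list[int], ram_gib: float) -> list[str]:
    """Return a list of human-readable problems; empty means OK."""
    problems = []
    if not gpu_mib:
        problems.append("no NVIDIA GPU detected")
    elif max(gpu_mib) < MIN_GPU_MIB * TOLERANCE:
        problems.append(f"largest GPU has only {max(gpu_mib)} MiB")
    if ram_gib < MIN_RAM_GIB * TOLERANCE:
        problems.append(f"only {ram_gib:.1f} GiB of RAM")
    return problems
```

On a real machine, you would pass `read_gpu_memory_mib()` and `parse_total_ram_gib(open("/proc/meminfo").read())` to `check_prerequisites` and print whatever it returns.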

Download Stable Video Diffusion Code

git clone https://github.com/Stability-AI/generative-models

cd generative-models

Download Official Pretrained Models for Stable Video Diffusion

- SVD: stabilityai/stable-video-diffusion-img2vid

- SVD-XT: stabilityai/stable-video-diffusion-img2vid-xt

Four checkpoint files are available across these two repositories (each offers a standard and an image-decoder variant), and any of them can be used. Place the downloaded files in the generative-models/checkpoints/ directory.
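To confirm that the checkpoints ended up in the right place, a small check like the one below can help. It is only a sketch: the four file names are assumptions based on the Hugging Face repositories at the time of writing, so verify them against the files you actually downloaded.

```python
from pathlib import Path

# Assumed checkpoint file names and the repository each one comes from.
# Verify these against the Hugging Face pages before relying on them.
EXPECTED_CHECKPOINTS = {
    "svd.safetensors": "stabilityai/stable-video-diffusion-img2vid",
    "svd_image_decoder.safetensors": "stabilityai/stable-video-diffusion-img2vid",
    "svd_xt.safetensors": "stabilityai/stable-video-diffusion-img2vid-xt",
    "svd_xt_image_decoder.safetensors": "stabilityai/stable-video-diffusion-img2vid-xt",
}


def find_checkpoints(repo_dir: str) -> dict[str, bool]:
    """Map each expected file name to whether it exists in <repo_dir>/checkpoints."""
    ckpt_dir = Path(repo_dir) / "checkpoints"
    return {name: (ckpt_dir / name).is_file() for name in EXPECTED_CHECKPOINTS}
```

Calling `find_checkpoints("generative-models")` from the parent directory returns a dictionary; since any one model is enough, a single `True` value means you can proceed.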

Model Differences:

- SVD: Trained to generate 14 frames at a resolution of 576x1024, given a context frame of the same size.

- SVD-XT: Same architecture as SVD, but fine-tuned to generate 25 frames. Note that it requires more GPU memory and RAM.

Python Environment Setup

It is recommended to create a Python environment using conda with Python 3.10 and install the SVD dependencies.

conda create --name svd python=3.10 -y

conda activate svd

pip3 install -r requirements/pt2.txt

pip3 install .

Running Stable Video Diffusion

Navigate to the SVD code directory and run SVD using Streamlit. You can customize the running parameters, such as --server.port and --server.address.

cd generative-models

streamlit run scripts/demo/video_sampling.py --server.address 0.0.0.0 --server.port 4801

On first run, SVD also downloads two additional models, laion/CLIP-ViT-H-14-laion2B-s32B-b79K and ViT-L-14. You can also download them manually and place them at the following paths:

/root/.cache/huggingface/hub/models--laion--CLIP-ViT-H-14-laion2B-s32B-b79K

/root/.cache/clip/ViT-L-14.pt
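If you place these files manually, it is worth checking that they sit where the demo will look for them. The sketch below assumes the default cache locations shown above, relative to the current user's home directory (`/root` for the root user); a customized Hugging Face cache location would need a different path. The helper names are hypothetical.

```python
from pathlib import Path
from typing import Optional


def expected_cache_paths(home: Path) -> list[Path]:
    """Default cache locations from the guide, relative to a home directory."""
    cache = home / ".cache"
    return [
        cache / "huggingface" / "hub" / "models--laion--CLIP-ViT-H-14-laion2B-s32B-b79K",
        cache / "clip" / "ViT-L-14.pt",
    ]


def missing_cache_entries(home: Optional[Path] = None) -> list[Path]:
    """Return the expected cache paths that do not exist yet."""
    base = Path.home() if home is None else Path(home)
    return [p for p in expected_cache_paths(base) if not p.exists()]
```

An empty list from `missing_cache_entries()` suggests nothing needs to be downloaded on first run; any path it returns is one the demo will try to fetch itself.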

When you see the following content in the terminal, it indicates that SVD has been successfully executed:

Using SVD

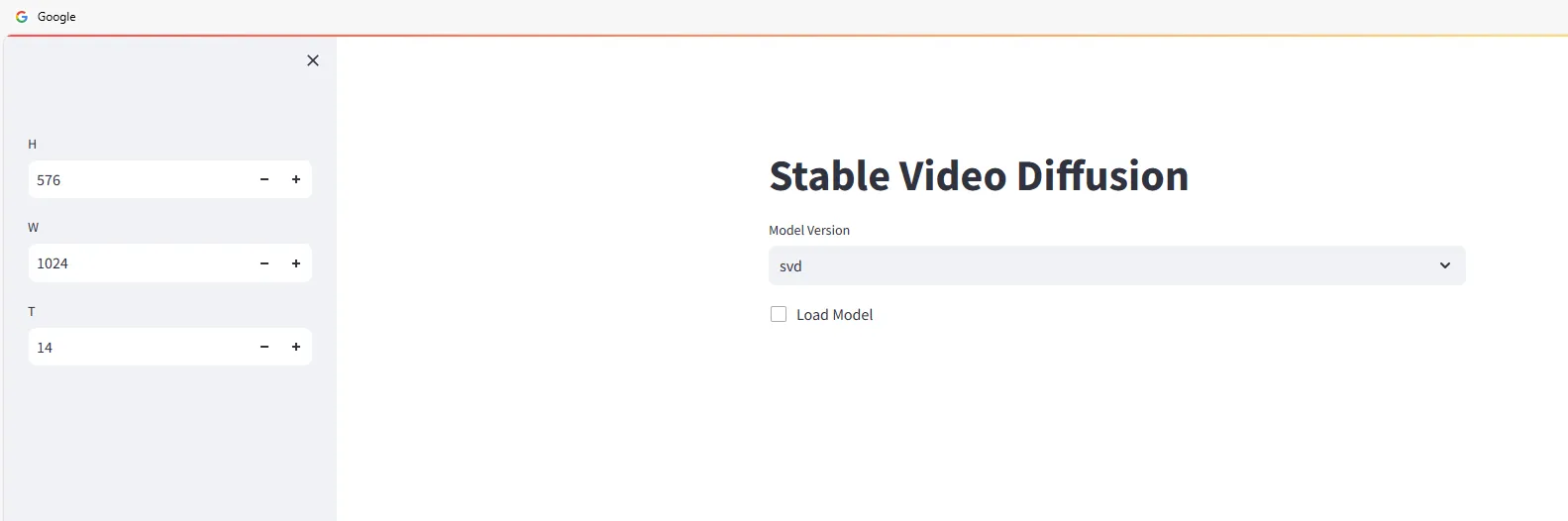

Open a web browser and go to http://<ip>:4801. You will see the following interface:

Start using Stable Video Diffusion to generate videos. Select the model version and check:

Drag and drop the images you want to generate videos from into the Input area, then click the Sample button and wait for the video generation.

Generation speed depends on your hardware; for example, an RTX 3090 takes around 2-3 minutes. The generated video is saved locally automatically.

Explanation of Parameter Settings

- H (Height): Set the height of video frames in pixels. Default value is 576.

- W (Width): Set the width of video frames in pixels. Default value is 1024.

- T (Time or Frames): Set the number of frames to generate for the video. Default value is 14 (SVD-XT is fine-tuned for 25 frames).

- Seed: Input a number to produce random but reproducible results. Default value is 23.

- Save images locally: Check this option to save the generated video frames locally.
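To get a feel for how H, W, and T scale the output, the defaults above can be collected in a small data class. This is an illustrative sketch only: `SamplingParams` is a hypothetical name, and the size it computes is for uncompressed 8-bit RGB frames, not the GPU memory the model needs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SamplingParams:
    """Default values as listed in the parameter table above."""
    height: int = 576
    width: int = 1024
    num_frames: int = 14
    seed: int = 23

    def raw_rgb_bytes(self) -> int:
        """Size of all frames as uncompressed 8-bit RGB (3 bytes per pixel)."""
        return self.num_frames * self.height * self.width * 3


defaults = SamplingParams()
print(defaults.raw_rgb_bytes())                          # 24772608 (about 23.6 MiB)
print(SamplingParams(num_frames=25).raw_rgb_bytes())     # 44236800 (about 42.2 MiB)
```

The output grows linearly with each of the three values, so going from 14 to 25 frames increases the decoded frame data by roughly 79%.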

Advanced Settings

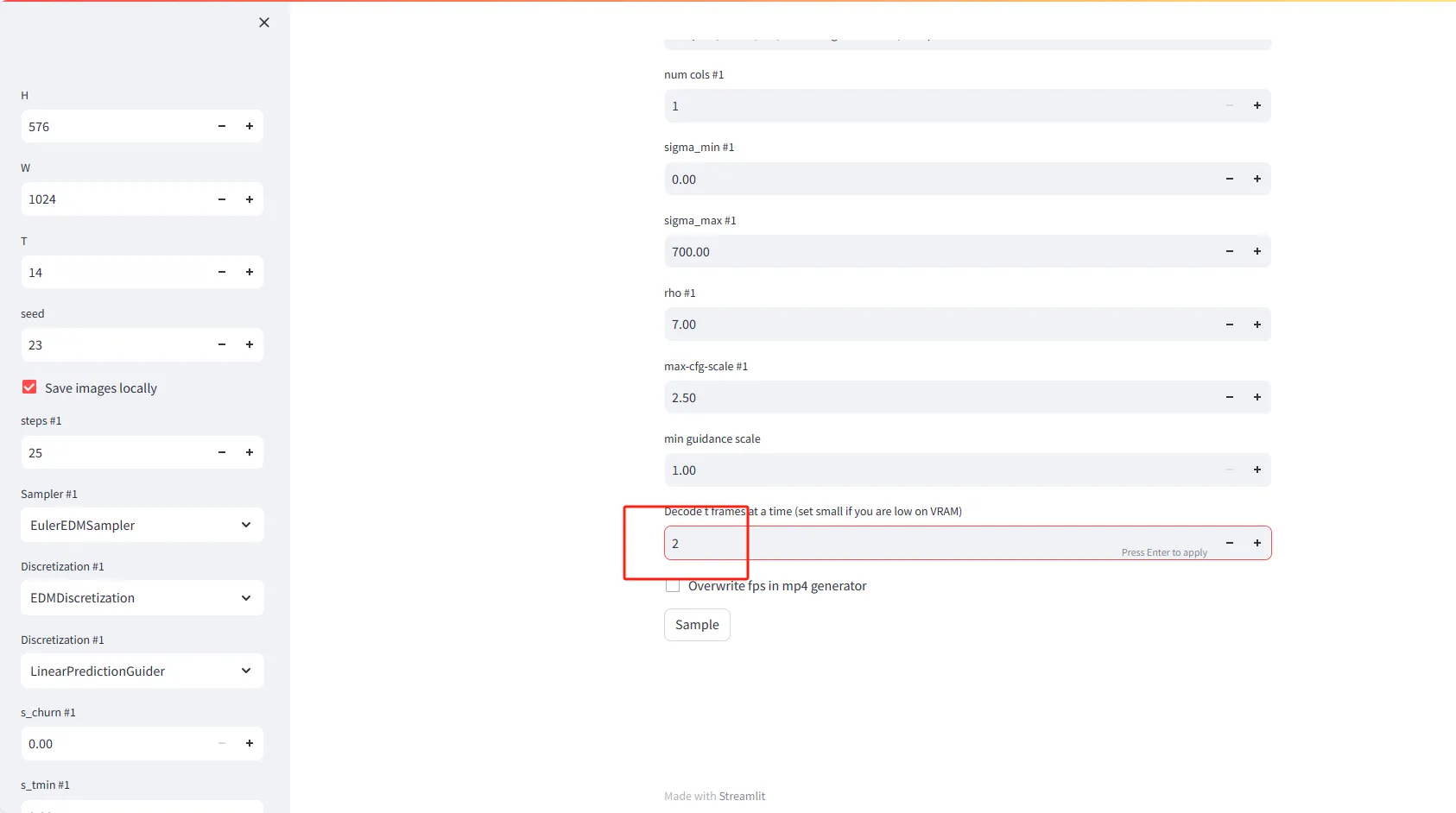

- Steps #1 (Iterations): Set the number of sampling steps (denoising iterations) used to generate the video. Default value is 25.

- Sampler #1: Choose a sampling algorithm to guide the generation of video frames. Default option is EulerEDMSampler.

- Discretization #1: Set the discretization strategy. Default is EDMDiscretization.

- Discretization #2: A second drop-down with options such as LinearPredictionGuider. Note that LinearPredictionGuider is a guidance method rather than a discretization strategy.
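To make the Steps and Discretization settings more concrete, here is a rough sketch of an EDM-style noise schedule, assuming EDMDiscretization follows the formula from Karras et al. (2022). The `sigma_min`, `sigma_max`, and `rho` defaults below are the generic values from that paper and may differ from what the SVD configuration uses; `edm_sigmas` is a hypothetical name.

```python
def edm_sigmas(num_steps: int, sigma_min: float = 0.002,
               sigma_max: float = 80.0, rho: float = 7.0) -> list[float]:
    """Noise levels from sigma_max down to sigma_min, spaced evenly in
    sigma**(1/rho) space (the EDM schedule of Karras et al., 2022)."""
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2")
    min_inv_rho = sigma_min ** (1.0 / rho)
    max_inv_rho = sigma_max ** (1.0 / rho)
    return [
        (max_inv_rho + i / (num_steps - 1) * (min_inv_rho - max_inv_rho)) ** rho
        for i in range(num_steps)
    ]


# With the default of 25 steps, the sampler would visit 25 noise levels.
sigmas = edm_sigmas(25)
```

A sampler such as Euler then walks through these noise levels one by one, so more steps means a finer schedule and a longer generation time.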

Special Features

- Decode t frames at a time: Set the number of frames to decode at once in memory. If you have low VRAM (Video RAM), consider setting a smaller value, like 2.

- Overwrite fps in mp4 generator: Check this option to overwrite the frame rate setting when generating mp4 video files.
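The idea behind "Decode t frames at a time" can be sketched as simple chunking: instead of decoding all frames in one pass, decode a few at a time so that peak memory stays lower. The code below is a generic illustration, not the demo's actual implementation; `decode_fn` stands in for whatever decoder is used.

```python
from typing import Callable, Sequence


def chunk_ranges(num_frames: int, decode_t: int) -> list[tuple[int, int]]:
    """Split range(num_frames) into consecutive [start, stop) chunks of at most decode_t."""
    if decode_t < 1:
        raise ValueError("decode_t must be at least 1")
    return [(start, min(start + decode_t, num_frames))
            for start in range(0, num_frames, decode_t)]


def decode_in_chunks(latents: Sequence, decode_fn: Callable[[Sequence], list],
                     decode_t: int) -> list:
    """Decode latents chunk by chunk and concatenate the results in order."""
    frames = []
    for start, stop in chunk_ranges(len(latents), decode_t):
        frames.extend(decode_fn(latents[start:stop]))
    return frames


# 14 frames decoded 2 at a time gives 7 decoder calls instead of 1.
print(len(chunk_ranges(14, 2)))  # 7
```

Smaller chunks trade speed for memory: each decoder call handles fewer frames, but there are more calls overall.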

Common Issues with Stable Video Diffusion

No module named 'scripts'

>> from scripts.demo.streamlit_helpers import *

ModuleNotFoundError: No module named 'scripts'

This error occurs because the generative-models directory is not on the PYTHONPATH environment variable. Add it as follows, adjusting /generative-models to the location of your clone:

echo 'export PYTHONPATH=/generative-models:$PYTHONPATH' >> /root/.bashrc

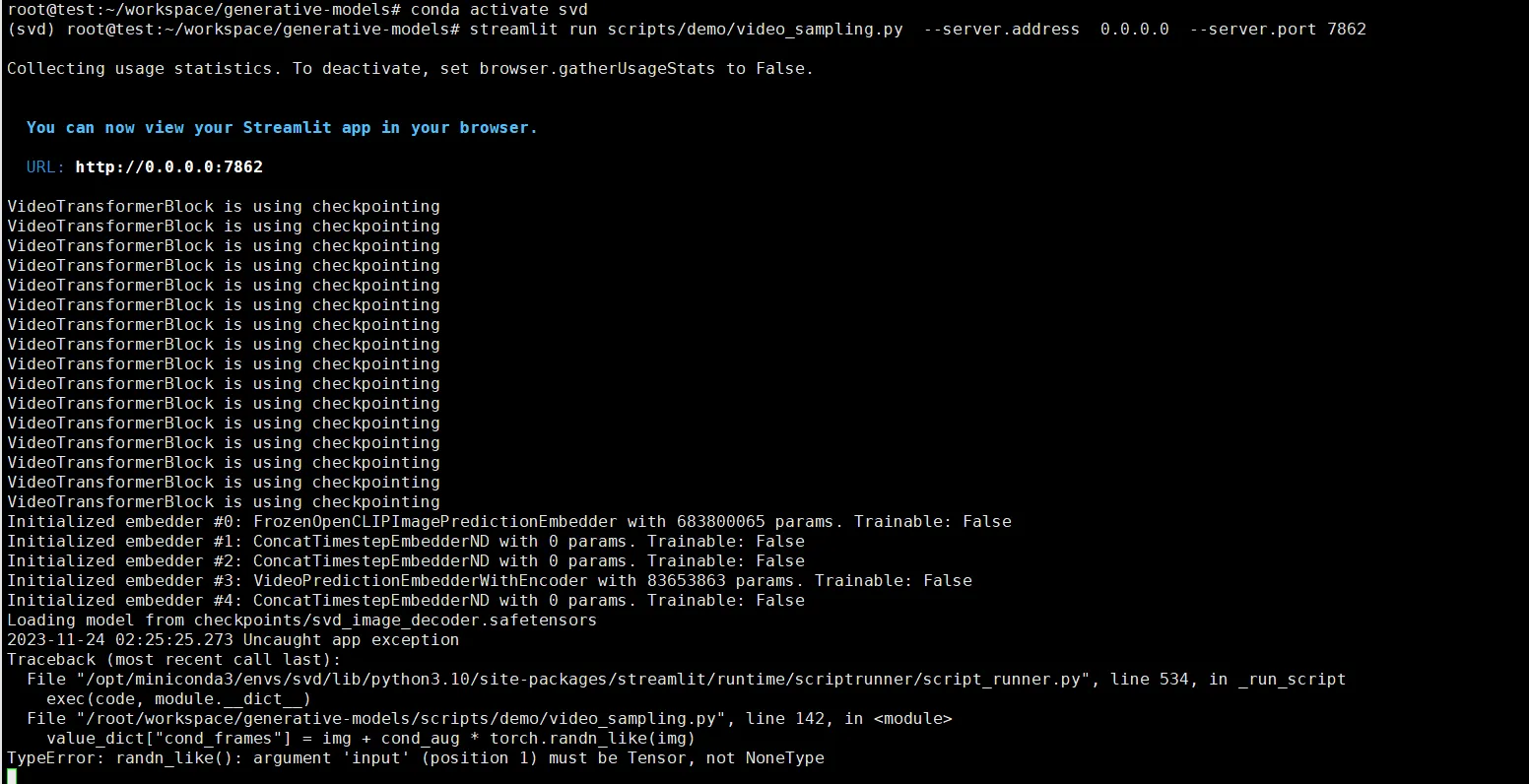

source /root/.bashrc

TypeError: randn_like()

value_dict["cond_frames"] = img + cond_aug * torch.randn_like(img)

TypeError: randn_like

This error occurs when no input image has been selected. Upload an image before clicking Sample.
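Both issues can be reproduced and diagnosed with a few lines of Python. The sketch below is a diagnostic aid under stated assumptions, not part of the SVD code: `ensure_repo_on_path` and `require_image` are hypothetical helpers, and the second one assumes that the missing image reaches the failing line as `None`.

```python
import importlib
import importlib.util
import sys
from pathlib import Path


def ensure_repo_on_path(repo_dir: str) -> bool:
    """Put repo_dir on sys.path for this process and report whether the
    `scripts` package can then be located."""
    repo = str(Path(repo_dir).resolve())
    if repo not in sys.path:
        sys.path.insert(0, repo)
    importlib.invalidate_caches()
    return importlib.util.find_spec("scripts") is not None


def require_image(img):
    """Fail early with a readable message instead of a TypeError later on."""
    if img is None:
        raise ValueError("No input image: upload an image before clicking Sample.")
    return img
```

If `ensure_repo_on_path("/generative-models")` returns `False`, the path passed in is probably not the directory that contains the `scripts` folder.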